Technohooks

CoT Announcements

Next Monthly Tech Talk on Tuesday, 11/07/23. Join our monthly tech talks to discuss current events, articles, books, podcasts, or whatever we choose related to technology and education. There is no agenda or schedule. Our next Tech Talk will be on Tuesday, November 7th, 2023 at 8-9pm EST/7-8pm CST/6-7pm MST/5-6pm PST. Learn more on our Events page and register to participate.

Next Book Club on Tuesday, 12/17/23: We are discussing Blood in the Machine: The Origins of the Rebellion Against Big Tech by Brian Merchant, led by Dan Krutka. Register for this book club event if you’d like to participate.

Critical Tech Study Participation: If you self-identify as critical of technology, please consider participating in our study. We are asking participants to share their stories by answering the following questions: To you, what does it mean to take a critical perspective toward technology? How have you come to take on that critical perspective? You can participate via our survey, and you are welcome to share it with others. Thank you!

by Dan Krutka and Marie Heath with Jacob Pleasants

Earlier this week we (Marie and Dan) had the privilege of meeting with students, delivering a keynote, and teaching classes at Elon University in North Carolina at the invitation of our friend Jeff Carpenter and Elon’s Teaching Fellows program. Our sessions centered on our Civics of Technology work, and our keynote focused on “Asking Technoskeptical Questions about Generative AI.” One of the challenges of such a talk is helping students (in this case, teacher candidates) grasp the depth of the social problems we’ve learned about over time, such as discriminatory design, algorithmic oppression, and unintended effects, from Ruha Benjamin, Safiya Umoja Noble, Meredith Broussard, Virginia Eubanks, Cathy O’Neil, Nicholas Carr, and so many other exceptional scholars.

When we are able to assign homework, we often assign the 2020 documentary Coded Bias, directed by Shalini Kantayya, and Neil Postman’s short 1998 talk, “Five things we need to know about technological change.” The former confronts technological inequities, and the latter helps students see that the effects of technological change are often collateral, unintended, and disproportionate. However, we aren’t always able to ask students to watch or read these sources, so we’ve generally relied on illustrative examples, which we’re calling technohooks here, to help students see technological problems.

In our keynote, we drew on the example of a racist soap dispenser at a Facebook office. Chukwuemeka Afigbo, Facebook’s head of platform partnerships in the Middle East and Africa, shared the video on Twitter. In the video, the soap dispenser cannot “see” a Black man’s hand and therefore does not dispense soap. The man then covers his palm with a white paper towel and places it under the dispenser, which “sees” the white color and dispenses soap. Afigbo noted, “If you have ever had a problem grasping the importance of diversity in tech and its impact on society, watch this video.” We use this example to help students quickly see how technologies can be embedded with bias and work in unintended ways.

While the talk went well, we wondered whether we had helped students understand the nature of the problem in such a short time. Did students view the racist soap dispenser as an illustrative example of systemic problems, or did they dismiss it as an isolated glitch that could be easily fixed? We’ve used different examples over time, and we realized we might benefit from gathering them in one place so we can choose among them when we need a hook to help students better understand the phenomenon. We have therefore added some of the other examples we use below, and we will use them to create a page on our Curriculum site where we’ll add more.

Please share any “technohooks” you may have with us and we’ll add them to the collection.

A Few More Technohooks

For each of the following technohooks, we generally recommend that educators give students an opportunity to view the hook and respond to questions such as: What do you notice? What do you wonder? Allowing students to discuss what they notice may uncover prior experiences they’ve had with technologies. Of course, educators should also learn about the issues underlying each example themselves; in this post, we largely just provide the sources.

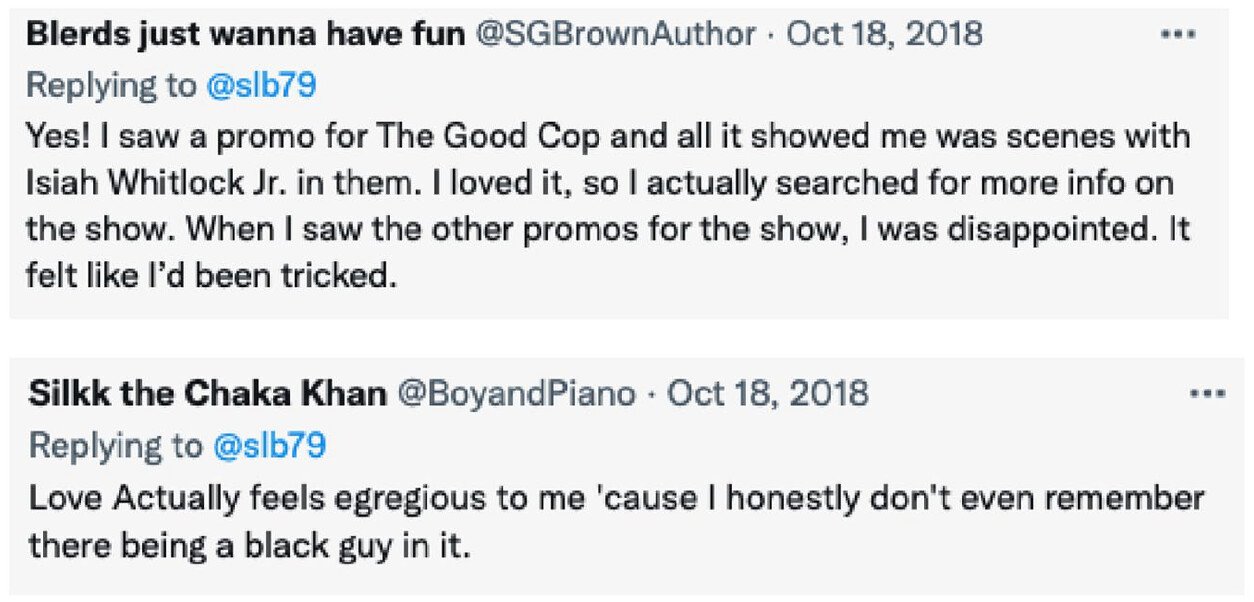

Hook: “The Algorithm Knows I’m Black”

Original Tweet by Stacia Brown

Tweet replies

Source: Meyerend, D. (2023). The algorithm knows I’m Black: from users to subjects. Media, Culture & Society, 45(3), 629-645.

Hook: Increased body searches for trans people at airports

Sources: Waldron, L., & Medina, B. (2019, August 26). When transgender travelers walk into scanners, invasive searches sometimes wait on the other side. ProPublica.

Costanza-Chock, S. (2020). Introduction: #TravelingWhileTrans, design justice, and escape from the Matrix of Domination. In Design Justice: Community-Led Practices to Build the Worlds We Need. MIT Press.

Hook: Apple Card Offers Lower Credit Limits to Women

Sources: Knight, W. (2019, November 19). The Apple Card didn't 'see' gender—and that's the problem: The way its algorithm determines credit lines makes the risk of bias more acute. Wired.

Hamilton, I. A. (2019, November 11). Apple cofounder Steve Wozniak says Apple Card offered his wife a lower credit limit. Insider.

Hook: Discriminatory design in park benches

Screenshots from Benjamin (2016)

Source: Benjamin, R. (2016, September 6). Set phasers to love me: Incubate a better World in the Minds and Hearts of Students [YouTube Video]. ISTE.

Hook: Predictive policing disproportionately targets Black and Latinx neighborhoods

Source: Sankin, A., Mehrotra, D., Mattu, S., & Gilbertson, A. (2021, December 2). Crime prediction software promised to be free of biases. New data shows it perpetuates them: Millions of crime predictions left on an unsecured server show PredPol mostly avoided Whiter neighborhoods, targeted Black and Latino neighborhoods. The Markup and Gizmodo.

Hook: Pedestrian deaths and their disproportionate effect on marginalized communities

According to the Governors Highway Safety Association’s 2022 report, pedestrian deaths have been growing at an alarming rate. Unlike in most economically developed countries, our transportation system is becoming less safe, rather than safer, for pedestrians. Worse yet, pedestrian deaths disproportionately affect people who are low-income and non-white. Why?

Background: The podcast guest (transcript) describes multiple causes of the problem, but two are of particular interest from a Civics of Technology perspective:

Cars are designed for the safety of passengers, not of people outside the car. The safety ratings that cars receive consider only the people inside the car.

Roads are designed for the movement of autos, not for the movement or safety of anyone else. Most pedestrian deaths occur on roads that are essentially “highways” cutting through places where people need to be able to walk.

These, of course, are human choices that could have been made differently. Nothing requires us to design our cars and roads this way.

Source: What Next: TBD (2023, July 26). America’s killer car problem [podcast]. Slate.

Hook: Arriving at school in the USA and the Netherlands

Source: u/Monsieur_Triporteur. Arriving at school, USA vs Netherlands. Reddit.